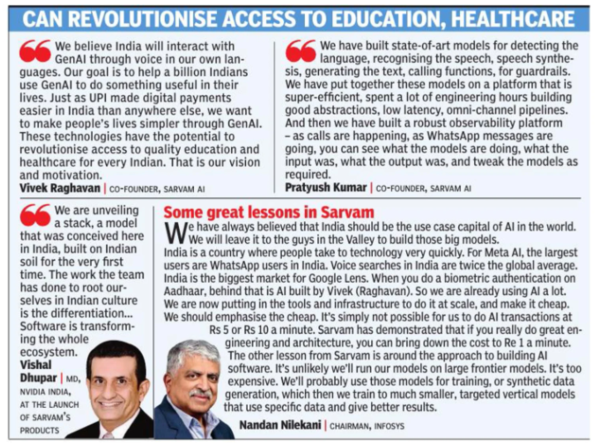

Vivek Raghavan, the co-founder of Sarvam AI, believes the key to unlocking AI’s potential in India lies in developing models that can understand and communicate in the country’s many regional languages through voice interfaces.

“Indians will interact with generative AI through voice in their own language,” Raghavan tells us.

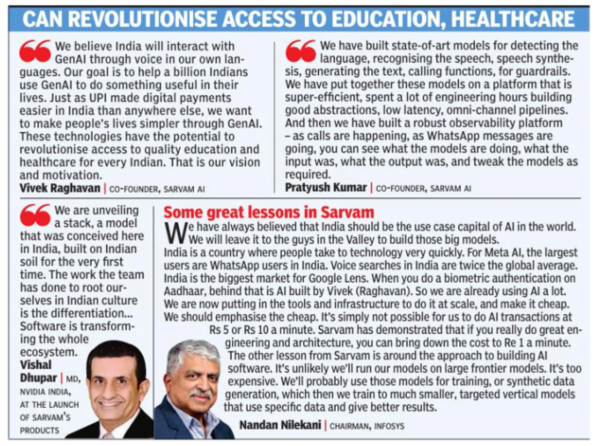

At the heart of Sarvam’s approach is the idea that while massive language models like GPT-4o and Gemini 1.5 offer impressive capabilities, much of what people need can be achieved with far smaller, more efficient models finetuned for specific tasks and linguistic contexts.

“If I want to do something that’s relevant millions of times a day, I can’t use those large models. It’s too expensive and not accurate enough,” Raghavan explains. “For a use case like customer support for a telecom company, I want a smaller, purpose-built model that outperforms bigger models on that task.”

To this end, Sarvam just announced Sarvam 2B, an open-source 2 billion parameter model trained from scratch on trillions of tokens of Indian language data, including synthetically generated text. At just a fraction of the size of models like GPT-4, and at a fraction of its cost, Sarvam 2B promises to deliver superior performance on Indian language tasks like translation, transliteration, and summarisation. And it does so for 10 Indian languages.

The company also unveiled “Sarvam Agents”: multilingual, voice-enabled AI assistants that can perform actions like booking tickets or scheduling meetings through telephony, WhatsApp or in-app interfaces. The cost? As low as 1 rupee per minute.

In a demo we saw, a voice AI agent deployed on the phone line of a healthcare customer starts by saying: “Namaste, Sarvam Saathi tak pahunchne ke liye, dhanyavad. Aap ki kya madad kar sakti hoon?” (Thank you for reaching out to Sarvam. How can I help you?) A seamless conversation in Hinglish follows with a user who has a dental issue. The bot was able to understand even uniquely Indian utterances. There was no noticeable latency. If the user interrupted the bot, the bot handled that beautifully. It understood all queries, and it eventually booked an appointment for the user with a doctor for the preferred date.

Unconventional beginnings

Raghavan’s path to founding Sarvam is unconventional. For 15 years, he worked as a volunteer on India’s massive Aadhaar digital identity project. This experience, he says, gave him the drive to leverage technology for societal impact. “I see a future where every child can get a quality education (via AI), which was not possible before this,” he says, echoing a point made by Indian-American entrepreneur & venture capitalist to TOI earlier this week.

He bumped into the Indian language AI problem over a decade ago when the Supreme Court sought a way to translate judgments into regional languages. This led him to advise the government’s Bhashini initiative, India’s AI-led language translation platform, launched as part of the Digital India vision.

The decision to finally form a for-profit startup, rather than continue in the public or non-profit sector, was driven by the need for speed and scale. “We need to move faster,” Raghavan explains. “This is a space where globally, things are moving very fast.”

Sarvam’s approach reflects Raghavan’s belief in “sovereign AI” — models tailored for Indian contexts that can be deployed on-premises by enterprises concerned about data privacy. It’s also about giving Indian researchers the tools to push the boundaries of language AI.

The company is open-sourcing the audio language model that’s built on top of Meta’s open-source Llama model. “We want the Indian AI ecosystem to make progress,” Raghavan says.

Fundamental innovations

Under the hood, Sarvam has pioneered techniques to reduce the “tokenizer tax” that makes representing Indian language text inefficient in standard models. In AI and ML parlance, a token can represent an entire word or just a single character. Indian languages routinely fall prey to the negative effects of the second category: representing a passage in an Indian language usually takes far more tokens than representing the same passage in a language like English. That is why methods to reduce the tokenizer tax of using an Indian language were important, says Raghavan. Fewer tokens mean a smaller, more efficient model.
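To get a rough feel for the tokenizer tax, consider a minimal Python sketch. It is not Sarvam's tokenizer, and the sample sentences are ours. It assumes the worst case for a tokenizer with little Indian language vocabulary: falling back to one token per UTF-8 byte. Each Devanagari character takes three bytes, while each English letter takes one.

```python
def byte_level_tokens(text: str) -> list[int]:
    """Assumed worst case: one token per UTF-8 byte (not Sarvam's method)."""
    return list(text.encode("utf-8"))


def tokens_per_word(text: str) -> float:
    """Average number of tokens spent on each whitespace-separated word."""
    words = text.split()
    return len(byte_level_tokens(text)) / len(words)


# Illustrative sample sentences chosen for this sketch, roughly equivalent in meaning.
english = "How can I help you"
hindi = "मैं आपकी क्या मदद करूँ"

english_rate = tokens_per_word(english)
hindi_rate = tokens_per_word(hindi)

print(f"English: {english_rate:.1f} tokens per word")
print(f"Hindi:   {hindi_rate:.1f} tokens per word")
print(f"Tax:     {hindi_rate / english_rate:.1f}x")
```

Under this assumption the Hindi sentence costs several times as many tokens per word as the English one. A tokenizer whose vocabulary includes common Indian language words and syllables would narrow that gap.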

The company also embraced synthetic data generation as a way to augment limited real-world datasets for Indian languages. “We’ve built models to generate data and we’re using that data to train models,” Raghavan says. Sarvam’s 2B model was trained on a cluster provided by Indian company Yotta.

Looking ahead, Raghavan sees opportunities to apply generative AI to domains rich in Indian knowledge like Ayurveda, where models could synthesise information from ancient texts into a coherent, referenceable corpus.