Even when the generated text is backed by links, the model could cherry-pick information without understanding the context, or draw on poor-quality sources. For example, one AI summary concluded that cold water is bad for digestion, based on a discussion in a news article, even though the source article says there’s no evidence for this. Similarly, it provided misleading information about sleep apnea, a health condition. People tend to read only the summary without diving into the details, especially during emergencies.

Multiple incidents have been reported of Google generating harmful content, such as advising people to add glue to pizza or eat rocks, or claiming Obama is a Muslim. In one instance, Microsoft’s Copilot amplified misleading information from a British tabloid. When asked whether someone can reverse ageing, Copilot responded: “Indeed”, citing a link titled ‘Man returns 10 years younger.’

A few reputed Indian media outlets published this misinformation without fact-checking. It was further amplified by social media power users in government and entertainment. A Microsoft spokesperson told us they are investigating the report. “We use a mix of human and automated techniques in an effort to improve our experience,” the company said. Google said it’s taking “swift actions” and removing embarrassing queries.

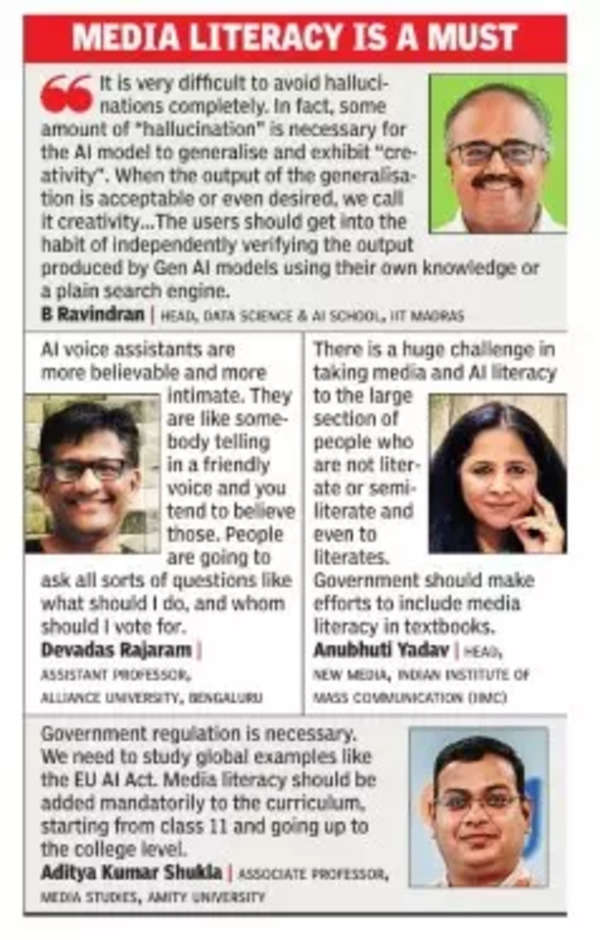

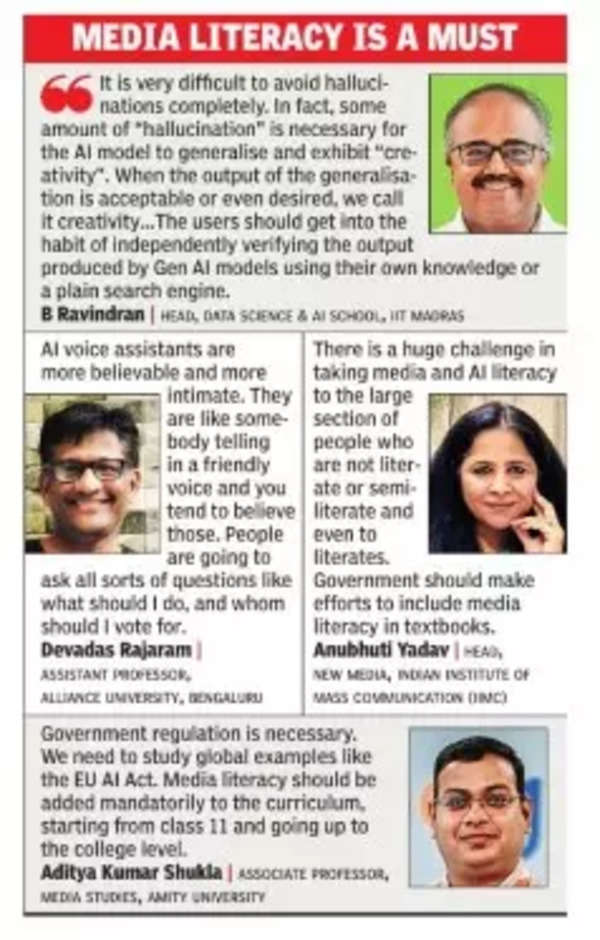

B Ravindran, head of Wadhwani School of Data Science & AI at IIT Madras, says technological solutions like retrieval-augmented generation (RAG) and tighter editorial control are necessary to make AI search work. RAG, he says, allows designers to seed the generation with trusted documents. But even this, he says, doesn’t prevent misinformation from entering the augmentation process.
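To make the idea concrete, here is a minimal sketch of the retrieval step in a RAG pipeline that only draws on an allowlist of trusted sources. Everything in it is an assumption for illustration: the `Document` type, the `TRUSTED_SOURCES` allowlist, the `.example` domains, the sample texts and the simple word-overlap scoring are all hypothetical, and a real search product would use far more sophisticated retrieval and an actual language model.

```python
from dataclasses import dataclass


@dataclass
class Document:
    source: str  # hypothetical domain the text came from
    text: str


# Hypothetical allowlist of sources an editor has chosen to trust.
TRUSTED_SOURCES = {"health-agency.example", "journal.example"}

# Hypothetical corpus; the texts are illustrative only.
CORPUS = [
    Document("health-agency.example",
             "There is no evidence that cold water harms digestion"),
    Document("tabloid.example",
             "Cold water is bad for digestion experts warn"),
    Document("journal.example",
             "Sleep apnea is a disorder that interrupts breathing during sleep"),
]


def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(text.lower().split())


def retrieve(query, corpus, top_k=2):
    """Return up to top_k trusted documents, ranked by word overlap with the query."""
    query_terms = tokenize(query)
    # Only documents from allowlisted sources are eligible.
    trusted = [d for d in corpus if d.source in TRUSTED_SOURCES]
    scored = [(len(query_terms & tokenize(d.text)), d) for d in trusted]
    # Drop documents that share no words with the query.
    scored = [(score, d) for score, d in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:top_k]]


def build_prompt(query, documents):
    """Assemble the text that would be handed to a language model."""
    context = "\n".join(f"[{d.source}] {d.text}" for d in documents)
    return (
        "Answer using only the sources below.\n"
        f"{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    question = "is cold water bad for digestion"
    print(build_prompt(question, retrieve(question, CORPUS)))
```

The allowlist keeps the tabloid text out of the prompt, but it checks only where a document came from, not whether it is correct. If a trusted document itself carried a false claim, this sketch would pass it straight to the model, which is the limitation Ravindran points to.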

Aditya Kumar Shukla, associate professor of media studies at Amity University, stresses the need for government regulation, more so since the younger generation is inclined to use these newer tools.

Anubhuti Yadav, head of new media at the Indian Institute of Mass Communication (IIMC), expresses concerns about gender and other biases in these models.

Now, with Gen AI platforms like GPT-4o and Gemini enabling voice interfaces, even in vernacular languages, there are worries that things could get worse. Devadas Rajaram, journalism educator and assistant professor at Alliance University, says such bots will feel more intimate and users will be more easily taken in. The bots may not even provide sources. Rajaram says AI literacy, along with media literacy, is a must.